I'm a research engineer working in machine learning and robotics. I did my Ph.D. in the Computer Science department at the University of Southern California, where I worked in the Robotic Embedded Systems Lab with Gaurav Sukhatme. I also have a Master's degree in CS from USC, and I got my B.S. in Engineering from Harvey Mudd College.

I took the photo above on a dark night in Joshua Tree National Park.

Contact

You can e-mail me by putting my first initial and last name in front of @gmail.com.

Projects

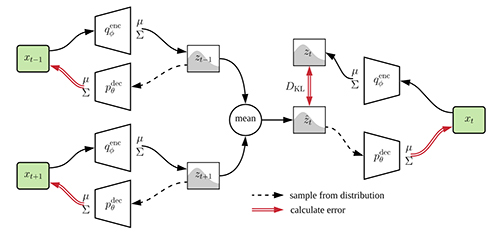

Plan-Space State Embedding

Robot control problems are often structured with a policy function that maps state values into control values, but learning that function, such as through reinforcement learning, can be difficult or unreliable. In this project I have explored ways to distill insights from demonstrations into an embedding space for the robot state that benefits the performance and reliability of subsequent learning problems. [4] [arXiv]

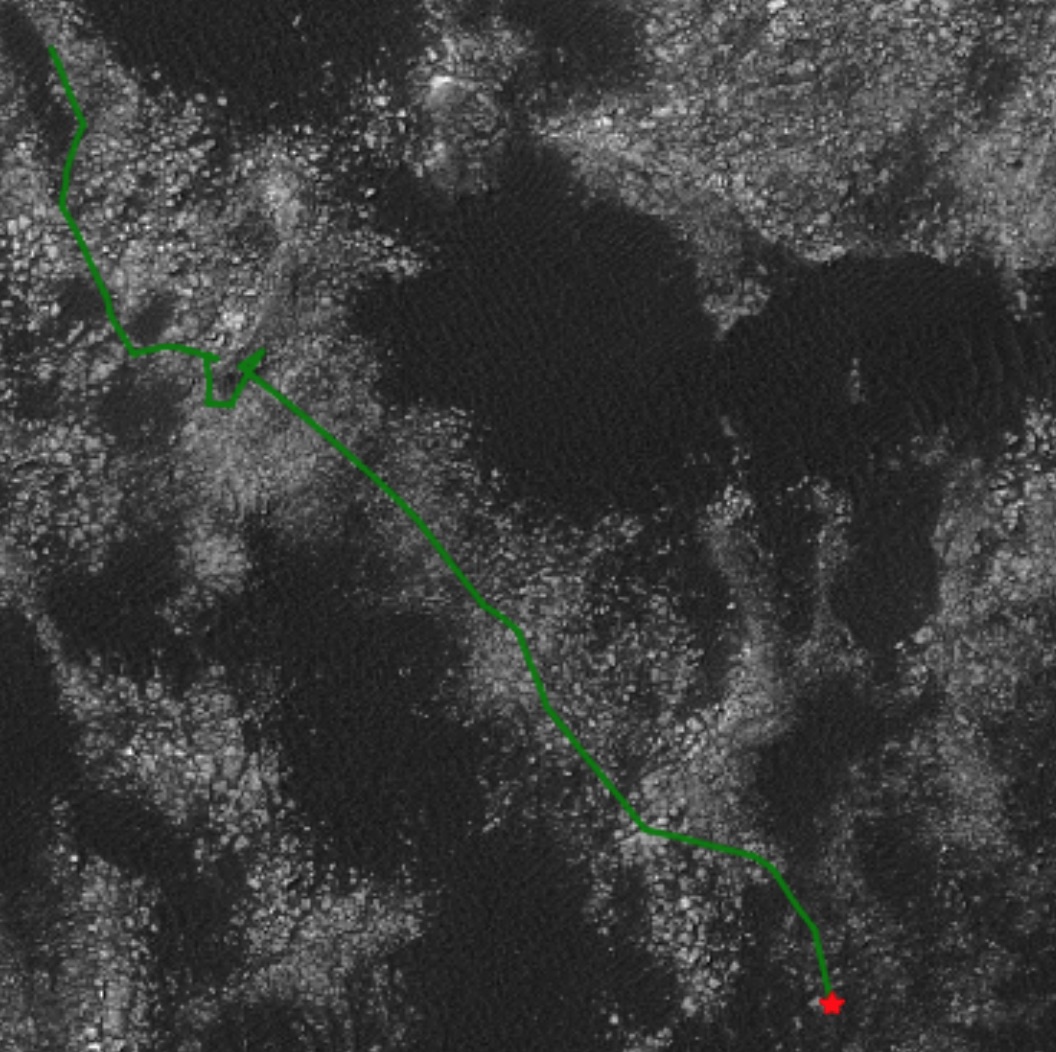

Rover-IRL

Planning for planetary rovers from orbital data is hard. In this project I've developed a new architecture that uses value iteration networks to turn the rover path planning problem into an inverse reinforcement learning problem, where we try to learn what traversable terrain looks like from path demonstrations. This lets us do from orbital data what was previously done from surface imagery, enabling path planning at longer ranges and in more places. [1] [PDF] [Website]

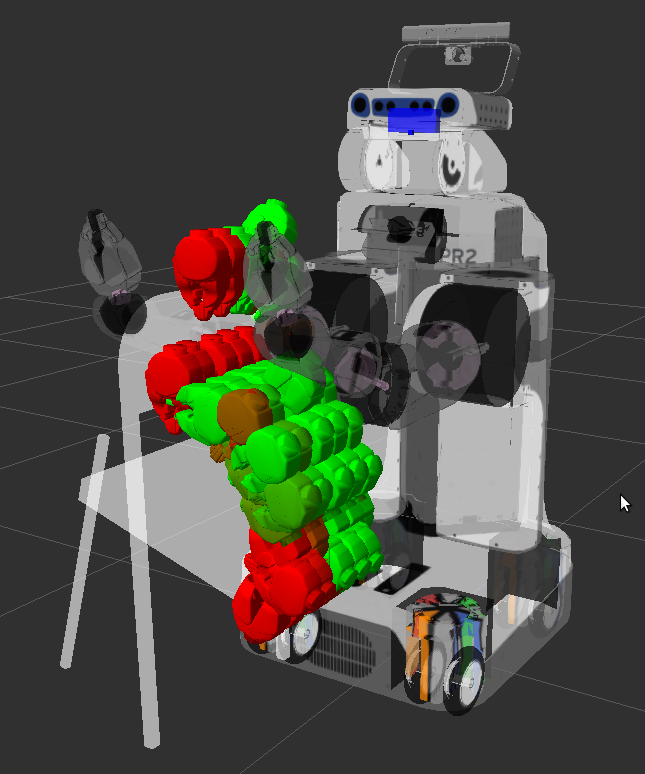

Multi-Step Planning

This project looks at solving combined task and motion planning problems using a multi-step planning architecture. We've demonstrated the effectiveness of this approach in simulated and real environments, on problems such as tabletop pick-and-place and manipulating an articulated folding chair. [2]

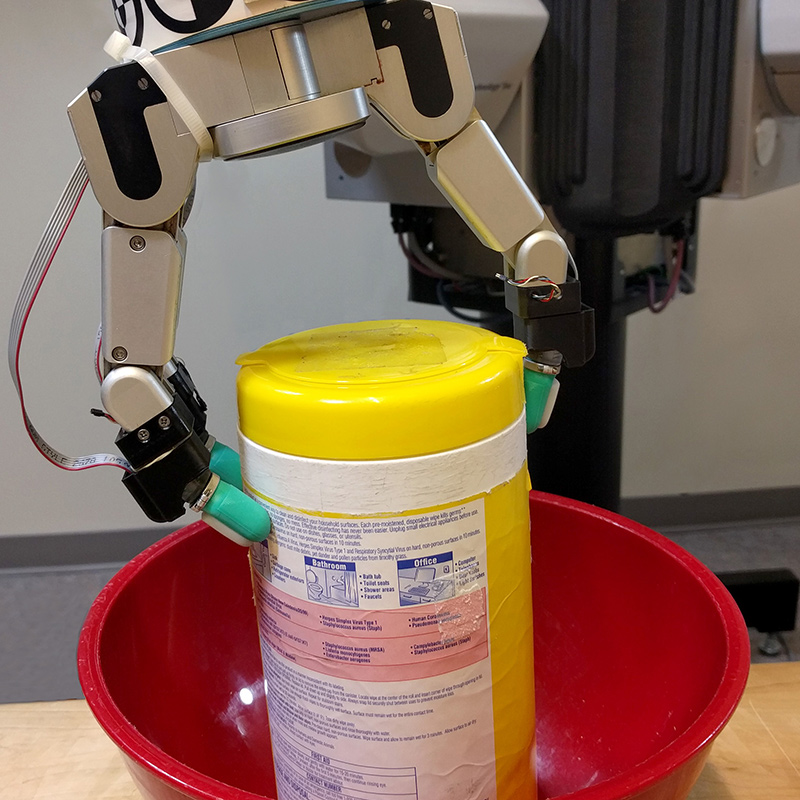

Informative Tactile Sensing

Tactile sensors provide a rich data source for grasping and manipulation problems, but the quality of the data can be influenced in unintuitive ways by choices about how to perform a grasp. This project looked at ways to use machine learning to choose parameters that give us the most useful data. [3]

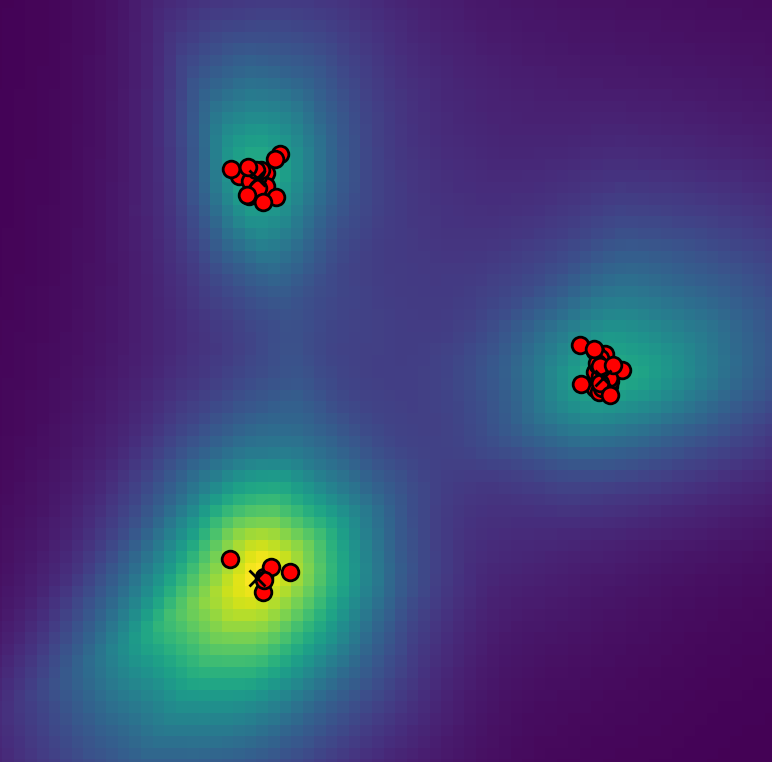

Visualizing Adversarial Learning

I built some code to visualize the training dynamics of generative adversarial networks (GANs) and to understand how they succeed and fail on multi-modal data, and how different GAN variants can address the issues we see. Although the problem space is simple, I found the visualization to be illuminating in building intuition about the training of neural networks. [Full Article] [GitHub]

Publications

Papers:

- [4] Max Pflueger and Gaurav S. Sukhatme. "Plan-Space State Embeddings for Improved Reinforcement Learning." Submitted to IEEE IROS 2020. [arXiv preprint]

- [1] Max Pflueger, Ali Agha, and Gaurav S. Sukhatme. "Rover-IRL: Inverse Reinforcement Learning with Soft Value Iteration Networks for Planetary Rover Path Planning." IEEE Robotics and Automation Letters (RA-L) and ICRA, 2019. [PDF] [BibTeX] [Website]

- [2] Max Pflueger and Gaurav S. Sukhatme. "Solving Task Space Problems with Multi-Step Planning." (Preprint) [PDF]

- [2] Max Pflueger and Gaurav S. Sukhatme. "Multi-Step Planning for Robotic Manipulation." Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), May 2015. [PDF] [BibTeX]

Workshop Papers and Posters:

- [1] Max Pflueger, Ali Agha, and Gaurav S. Sukhatme. "Soft Value Iteration Networks for Planetary Rover Path Planning." Presented Dec. 8, 2017 at the NIPS workshop Acting and Interacting in the Real World: Challenges in Robot Learning. [PDF]

- [1] Max Pflueger and Ali Agha. "Long-Range Path Planning for Planetary Rovers via Imitation Learning and Value Iteration Networks." Presented Oct. 6, 2017 at the SoCal ML Symposium. [Amazon Best Poster Award Honorable Mention]

- [3] Max Pflueger and Gaurav S. Sukhatme. "Inferring Informative Grasp Parameters from Trained Tactile Data Models." Presented June 16, 2016 at the workshop Robot-Environment Interaction for Perception and Manipulation at RSS 2016. [Extended Abstract] [Poster]

- [2] Max Pflueger and Gaurav S. Sukhatme. "Multi-step Planning for Robotic Manipulation of Articulated Objects." Presented June 27, 2013 at the workshop Combined Robot Motion Planning and AI Planning for Practical Applications at RSS 2013. [PDF] [BibTeX]